Why use goquery? The page (https://tools.ietf.org/rfc/rfc%d.txt) is plain text. It doesn’t contain any HTML.

There is no need for goquery.
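
Since the page is plain text, the standard library should be enough to download it. Here is a minimal sketch, assuming the URL pattern above still serves the RFCs. The names `rfcURL` and `fetchRFC` are made up for this example, and RFC 2616 is just an arbitrary pick:

```go
package main

import (
	"fmt"
	"io"
	"net/http"
)

// rfcURL builds the address of RFC number n from the URL pattern above.
func rfcURL(n int) string {
	return fmt.Sprintf("https://tools.ietf.org/rfc/rfc%d.txt", n)
}

// fetchRFC downloads RFC number n and returns its text as a string.
// Hypothetical helper: it assumes the server answers 200 with the plain text.
func fetchRFC(n int) (string, error) {
	resp, err := http.Get(rfcURL(n))
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return "", fmt.Errorf("rfc %d: unexpected status %s", n, resp.Status)
	}
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return "", err
	}
	return string(body), nil
}

func main() {
	text, err := fetchRFC(2616)
	if err != nil {
		fmt.Println("download failed:", err)
		return
	}
	fmt.Println(len(text), "bytes downloaded")
}
```

The returned string can be passed directly to a word-counting function; no HTML parsing step is involved.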

Also, I don’t understand “… and then parses the data to a csv file”. What exactly do you need to do? Do you mean writing the data? What data do you want to write to the CSV file? The 20 most frequent words?

Here is code that counts words with more than 3 letters. It’s quicker to show the code than to explain the algorithm. Note that it also treats a number as a word. Remove `|| unicode.IsNumber(c)` if you want to count words made of letters only. Counting is case-sensitive, so “The” and “the” are counted separately.

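```go
import "unicode"

// countWordsIn returns how often each word of more than 3 runes occurs in text.
// A word is a maximal run of letters or digits; matching is case-sensitive.
func countWordsIn(text string) map[string]int {
	var wordBegPos, runeCount int
	wordCounts := make(map[string]int)
	for i, c := range text {
		if unicode.IsLetter(c) || unicode.IsNumber(c) {
			if runeCount == 0 {
				wordBegPos = i // byte offset where the current word starts
			}
			runeCount++
			continue
		}
		if runeCount > 3 {
			wordCounts[text[wordBegPos:i]]++ // a missing key reads as 0
		}
		runeCount = 0
	}
	// Count the final word when the text does not end with a separator.
	if runeCount > 3 {
		wordCounts[text[wordBegPos:]]++
	}
	return wordCounts
}
```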

If you need to combine the word counts of several RFC text files, merge the per-file maps into one total. You can do it like this:

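```go
// totalWordCounts holds the word counts summed over all files processed so far.
var totalWordCounts = make(map[string]int)

// accumulateWordCounts adds the counts in wordCounts to totalWordCounts.
func accumulateWordCounts(wordCounts map[string]int) {
	for key, value := range wordCounts {
		totalWordCounts[key] += value
	}
}
```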

To get the 20 most frequent words, you could copy all word-count pairs into a slice, sort it by decreasing count, and return the first 20 pairs.
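
The slice-and-sort approach could look like the sketch below. It is not a tuned implementation. `wordCount` and `topWords` are names chosen for this example, and ties are broken alphabetically only so that the result is deterministic:

```go
package main

import (
	"fmt"
	"sort"
)

// wordCount pairs a word with its number of occurrences (illustrative type).
type wordCount struct {
	Word  string
	Count int
}

// topWords returns at most n words of counts, most frequent first.
func topWords(counts map[string]int, n int) []wordCount {
	// Copy every word-count pair of the map into a slice.
	pairs := make([]wordCount, 0, len(counts))
	for w, c := range counts {
		pairs = append(pairs, wordCount{Word: w, Count: c})
	}
	// Sort by decreasing count; equal counts are ordered alphabetically.
	sort.Slice(pairs, func(i, j int) bool {
		if pairs[i].Count != pairs[j].Count {
			return pairs[i].Count > pairs[j].Count
		}
		return pairs[i].Word < pairs[j].Word
	})
	// Keep only the first n pairs, if there are that many.
	if n < 0 {
		n = 0
	}
	if n < len(pairs) {
		pairs = pairs[:n]
	}
	return pairs
}

func main() {
	counts := map[string]int{"the": 123, "this": 29, "house": 4, "hold": 8, "then": 27}
	for _, p := range topWords(counts, 3) {
		fmt.Println(p.Word, p.Count)
	}
}
```

Sorting costs O(n log n) for n distinct words, which is usually fine for a handful of RFCs.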

A more efficient method is to use a heap (`import "container/heap"`). A heap is a slice organized so that the smallest or biggest value is at index 0; which one depends on the comparison function you provide. Here, you only need a heap of 20 word-count pairs with the smallest count at index 0 (the top of the heap). You then iterate over all word-count pairs in the map, and whenever a count is bigger than the count at the top of the heap, you replace the pair at index 0 with the pair from the map. After each replacement you call `heap.Fix(h, 0)` to bring the new smallest count back to the top.

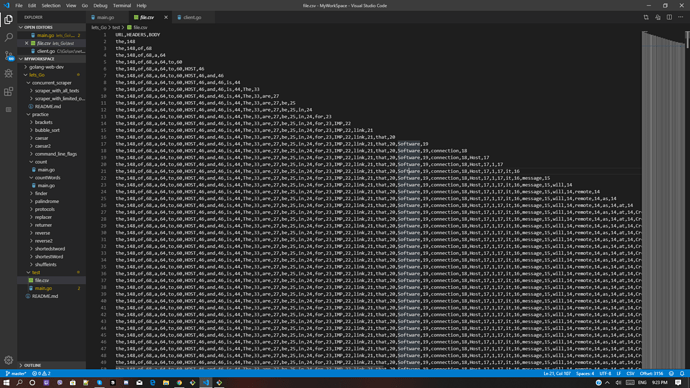

Here is the code, which I had some fun implementing.

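```go
package main

import (
	"container/heap"
	"fmt"
	"sort"
)

// elem is a word together with its number of occurrences.
type elem struct {
	word  string
	count int
}

// elemHeap is a min-heap of elems ordered by count.
type elemHeap []elem

func (h elemHeap) Len() int           { return len(h) }
func (h elemHeap) Less(i, j int) bool { return h[i].count < h[j].count }
func (h elemHeap) Swap(i, j int)      { h[i], h[j] = h[j], h[i] }

// Push and Pop are required by heap.Interface but never called here.
func (h elemHeap) Push(x interface{}) {}
func (h elemHeap) Pop() interface{}   { return nil }

// mostFrequentWords returns up to nbrWords words of m with the highest
// counts, in decreasing count order.
func mostFrequentWords(m map[string]int, nbrWords int) []elem {
	if nbrWords <= 0 {
		return nil // avoids indexing an empty heap below
	}
	// All counts start at 0, which is already a valid min-heap.
	h := make(elemHeap, nbrWords)
	for word, count := range m {
		if count > h[0].count {
			h[0] = elem{word: word, count: count}
			heap.Fix(h, 0) // restore the smallest count at the top
		}
	}
	sort.Slice(h, func(i, j int) bool { return h[i].count > h[j].count })
	// Drop unused slots when m has fewer than nbrWords words.
	for len(h) > 0 && h[len(h)-1].count == 0 {
		h = h[:len(h)-1]
	}
	return h
}

func main() {
	m := map[string]int{"the": 123, "this": 29, "house": 4, "hold": 8, "then": 27}
	r := mostFrequentWords(m, 3)
	for i := range r {
		fmt.Println(r[i])
	}
}
```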
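
If the CSV step in the question means writing the most frequent words with their counts, the standard `encoding/csv` package can probably do the job. This is a sketch under that assumption; the header row and the names `row` and `toCSV` are made up for illustration:

```go
package main

import (
	"encoding/csv"
	"fmt"
	"strconv"
	"strings"
)

// row is one CSV record: a word and its count (illustrative type).
type row struct {
	word  string
	count int
}

// toCSV renders rows as CSV text, preceded by an assumed "word,count" header.
func toCSV(rows []row) (string, error) {
	var sb strings.Builder
	w := csv.NewWriter(&sb)
	if err := w.Write([]string{"word", "count"}); err != nil {
		return "", err
	}
	for _, r := range rows {
		if err := w.Write([]string{r.word, strconv.Itoa(r.count)}); err != nil {
			return "", err
		}
	}
	w.Flush() // the writer is buffered, so flush before reading the result
	return sb.String(), w.Error()
}

func main() {
	out, err := toCSV([]row{{"the", 123}, {"this", 29}, {"then", 27}})
	if err != nil {
		fmt.Println("csv error:", err)
		return
	}
	fmt.Print(out)
}
```

To write to a file instead of a string, pass the file returned by `os.Create` to `csv.NewWriter`.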